Checking out k3s and Ubuntu Server 20.04 Part 1

By EricMesa

As I’ve been working on learning server tech, I’ve gone from virtualization to Docker containers and now Podman containers and Podman pods. The pod in Podman comes from a view towards Kubernetes. I moved to Podman because of the cgroups v2 issue in Fedora 31, so I figured why not go all the way and check out Kubernetes? Kubernetes is often abbreviated as k8s, and a few months back I found k3s, a lightweight Kubernetes distro that’s meant to work on edge devices (including Raspberry Pis!). For some reason (that I can’t seem to find on the main k3s site), I got it in my head that it was better tailored to Ubuntu than Red Hat, so I decided to also take Ubuntu Server 20.04 for a spin.

While one of my cloud servers runs Ubuntu, I didn’t have to install it. I just spun it up at my provider. So it’s been a long time since I did an Ubuntu installation. I think the newest ISO I had before 2020 was one of the 2016 Ubuntu ISOs. The server install is VERY slick. Slickest non-GUI installer I’ve ever seen. I’ll have to do a future post about it. I liked that it detected a more up-to-date installer during the install and offered to download and use THAT installer, sidestepping any bugs in the older one. One of the most interesting parts of the install was when it asked if I wanted to install some of the more popular server apps. The list was quite eclectic and must come from the popularity tool, because it even had SABnzbd and I can’t imagine Canonical pushing that on its own.

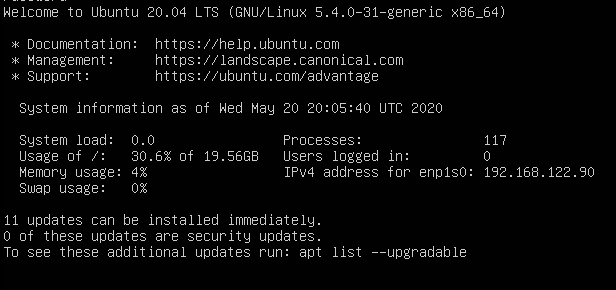

One thing I *am* used to from my Ubuntu cloud server that I loved seeing here is all the great information you get upon login. I wish CentOS or Red Hat would do something similar.

Ubuntu login information


I decided to go ahead with the instructions on the k3s front page under “this won’t take long”:

curl -sfL https://get.k3s.io | sh -

# Check for Ready node, takes maybe 30 seconds

k3s kubectl get node

After a bit (I didn’t time whether it was 30 seconds), I got back:

NAME STATUS ROLES AGE VERSION

k3s Ready master 95s v1.18.2+k3s1
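For anyone who would rather script that readiness check than eyeball the table, here is a minimal sketch. The function name all_nodes_ready is made up for illustration. It only parses the text that kubectl get node --no-headers prints, and it assumes STATUS is the second column, as in the output above.

```shell
# Reads `kubectl get node --no-headers` output on stdin.
# Succeeds only if at least one node is listed and every node's
# STATUS column (assumed to be column 2) is exactly "Ready".
all_nodes_ready() {
    awk '
        NF { total++; if ($2 == "Ready") ready++ }
        END { exit !(total > 0 && ready == total) }
    '
}
```

On the k3s box it could be used as `k3s kubectl get node --no-headers | all_nodes_ready && echo "cluster is up"`. kubectl also has a built-in for this, `k3s kubectl wait --for=condition=Ready node --all --timeout=120s`, which is probably the better choice outside of a quick script.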

OK, looks like I have some Kubernetes ready to rock. I figured the easiest of the containerized programs I’m currently running in Podman to move over would be Miniflux. I had already created a YAML file with:

podman generate kube (name of pod) > (filename).yaml

That command generates the Podman equivalent of a docker-compose.yml file. You can use that yaml to recreate that pod on any other computer. The top of the file it generates says:

# Save the output of this file and use kubectl create -f to import

# it into Kubernetes.

So I’d like to try that and see what happens. Of course, first I have to recreate the same folder structure; in that yaml I’m using a folder to store the data so that it’s easier for me to make backups than if I had to mount the directory via podman commands.
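Recreating the folders by hand is fine for one pod, but the step can be sketched as a script too. This is only a sketch under assumptions: host_paths and make_host_dirs are hypothetical helper names, and the parsing is naive. It assumes the generated file lists each hostPath directory on its own `path: /some/dir` line, with no spaces in the directory name and no other `path:` keys in the file.

```shell
# Prints every "path:" value found in a pod YAML file, one per line.
# Naive line-based parsing (an assumption, not a real YAML parser):
# "mountPath:" lines are skipped because the first field must be exactly "path:".
host_paths() {
    awk '$1 == "path:" { print $2 }' "$1"
}

# Creates each of those directories; a no-op for ones that already exist.
# Depending on where the directories live, this may need to run under sudo.
make_host_dirs() {
    host_paths "$1" | while IFS= read -r dir; do
        mkdir -p "$dir"
    done
}
```

Running `host_paths miniflux.yaml` first to see what would be created seems prudent before calling `make_host_dirs miniflux.yaml`.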

After creating the folder, I transferred over the yaml file. Then I tried the kubectl create -f command.

sudo kubectl create -f miniflux.yaml

I waited for the system to do something. Eventually I got back the feedback:

pod/miniflux created

Being new to true Kubernetes (as opposed to just Podman pods), I wasn’t sure what to do with this information. But I was happy that it hadn’t simply failed. Taking a look at the documentation for k8s, I learned about the command kubectl get. So I tried:

kubectl get pods

NAME READY STATUS RESTARTS AGE

miniflux 0/2 CrashLoopBackOff 75 3h7m

Welp! That doesn’t look good.
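With more than one pod, picking the broken ones out of that table gets tedious. A small filter like the following could help. It is a sketch, unhealthy_pods is a made-up name, and it assumes the column order shown above (NAME, READY, STATUS, ...). It does not look at the READY column, so a pod that reports Running with containers still not ready would slip through.

```shell
# Reads `kubectl get pods --no-headers` output on stdin and prints the name
# and status of every pod whose STATUS (assumed column 3) is neither
# Running nor Completed.
unhealthy_pods() {
    awk 'NF >= 3 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}
```

Usage would be along the lines of `sudo kubectl get pods --no-headers | unhealthy_pods`.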

Following along with the tutorial, I typed:

sudo kubectl describe pods

This gave a bunch of info that reminds me of a docker or podman info command. But the key to what was going on was at the end:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Started 33m (x34 over 3h8m) kubelet, k3s Started container minifluxgo

Normal Pulled 28m (x34 over 3h7m) kubelet, k3s Successfully pulled image "docker.io/library/postgres:latest"

Warning BackOff 13m (x739 over 3h5m) kubelet, k3s Back-off restarting failed container

Warning BackOff 3m40s (x772 over 3h5m) kubelet, k3s Back-off restarting failed container

To see the pod’s logs, I had to ask for each container by name:

kubectl logs -p miniflux -c minifluxgo

kubectl logs -p miniflux -c minifluxdb

The -c flag is needed because the pod has more than one container, and -p asks for the logs of the previous (crashed) instance of that container. It turns out the issue was with the database: it was complaining about being started as the root user.
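Typing one logs command per container gets old quickly, so here is a sketch of a loop that does it for every container in a pod. previous_logs is a hypothetical helper name. The only kubectl features it leans on are `get -o jsonpath` and `logs -p -c`, and on k3s it most likely has to run with the same sudo rights as the commands above.

```shell
# Prints the previous-run logs of every container in the pod named by $1,
# with a small header before each container's output.
previous_logs() {
    pod=$1
    # jsonpath prints the container names separated by spaces.
    for c in $(kubectl get pod "$pod" -o jsonpath='{.spec.containers[*].name}'); do
        echo "=== $c ==="
        kubectl logs -p "$pod" -c "$c"
    done
}
```

For this pod that would be `previous_logs miniflux`. kubectl logs also accepts an --all-containers flag, which gives a similar result without the per-container headers.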

(Sidenote: awesomely, Ubuntu Server Edition comes with tmux pre-installed!)

Strangely there doesn’t seem to be a way to restart a pod. The consensus seems to be that you use:

kubectl scale deployment <> --replicas=0 -n service

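So I tried that. Apparently it doesn’t work for a pod created from Podman’s YAML: the file defines a bare pod (hence the “pod/miniflux created” message earlier), so there is no deployment to scale.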
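For a bare pod like this one, the nearest thing to a restart appears to be deleting it and creating it again from the same file. A minimal sketch, assuming the manifest is still on disk (recreate_from_manifest is a made-up name):

```shell
# Deletes whatever the manifest in $1 describes (not an error if it is
# already gone), then creates it again. Only creates if the delete succeeded.
recreate_from_manifest() {
    manifest=$1
    kubectl delete -f "$manifest" --ignore-not-found &&
        kubectl create -f "$manifest"
}
```

Here that would be `recreate_from_manifest miniflux.yaml`. kubectl replace --force -f miniflux.yaml is documented as doing the same delete-and-recreate in one step. Either way the new pod starts from scratch, so this only helps once the underlying problem in the YAML has been fixed.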

Eventually I decided to try to get underneath k3s. The closest thing to the docker or podman command in k3s is crictl, which talks to the underlying container runtime.

crictl ps

That showed my containers. I thought I had maybe fixed what was wrong with the database, so I tried:

crictl start (container ID)

Apparently it doesn’t want to do that because the container is in an exited status. This whole thing is so counter-intuitive coming from Docker/Podman land. Then again, when I went to try again, it had switched container IDs because k8s had tried to restart it. So it truly is ephemeral.
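Since the IDs keep changing, looking containers up by name each time is less frustrating than copying IDs around. The sketch below assumes crictl's ps flags -a (include exited containers), --name (filter by name) and -q (print only IDs). container_ids and container_logs are hypothetical helper names, and on k3s the commands probably need sudo.

```shell
# Prints the IDs of all containers, running or exited, whose name matches $1.
container_ids() {
    crictl ps -a --name "$1" -q
}

# Prints the logs of every matching container, looking the IDs up at call
# time so a restart in between does not leave a stale ID behind.
container_logs() {
    for id in $(container_ids "$1"); do
        echo "=== $id ==="
        crictl logs "$id"
    done
}
```

For the database container that would be `container_logs minifluxdb`.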

Well, that’s all I could figure out over the course of a few hours. I’ll be back with a part 2 if I figure this out.