Below you will find pages that utilize the taxonomy term “Btrfs”

Programming Update: Jan 2023 and Feb 2023

January

January was a relatively light programming month for me. I was focused on finishing up end of year blog posts and other tasks. Since Lastfmeoystats is used to generate the stats I need for my end of year music post, I worked on it a little to make some fixes. The biggest fix was to change the chart titles not to be hardcoded. I didn’t realize it until I was reviewing my blog post, but I had hard-coded the year when I first wrote the code a couple years ago. I also changed the limits on some of the data I was collecting so that I could do more expansive trending for my overall stats.

Programming Update May-July 2022

I started working my way back towards spending more time programming as the summer started (in between getting re-addicted to CDProjektRed’s Gwent).

I started off by working on my btrfs snapshot program, Snap in Time. I finally added in the ability for the remote culling to take place. (My backup directories had started getting a LITTLE too big.) I also added in official text log files so that I wouldn’t have to rely on my cronjob log file hack.

My Programming Projects and Progress in 2020

Back in 2019, when I did my programming retrospective, I made a few predictions. How did those go?

- Work on my Extra Life Donation Tracker? Yup! See below!

- Write more C++ thanks to Arduino? Not so much.

- C# thanks to Unity? Yes, but not in the way I thought. I only did minor work on my game, but I did start a new GameDev.tv class.

- Learning Ruby? Well, I wouldn’t necessarily say I learned Ruby. I did finish the book Ruby Wizardry and I took copious notes. But until I do some practice - maybe via some code katas, I don’t think I’ll have solidified it in my mind.

- 3D Game Dev? Nope, not really.

- Rust and Go? Not even close. Although I did make sure to get some books on the languages.

So, what happened? On the programming front, I wanted to continue my journey to truly master Python after having used it at a surface level for the past 15ish years. I dedicated myself to doing the Python Morsels challenges (more on that below) and working through various Python development exercises. Outside of programming, the time I had off from COVID was used to play with my kids and they wanted to play lots of video games. So we took advantage of having way more time than usual to do that. So a lot of my goals slipped. We’ll get to 2021 predictions at the end, so let’s take a look at 2020!

Reviving and Revamping my btrfs backup program Snap-In-Time

If you’ve been following my blog for a long time, you know that back in 2014 I was working on a Python program to create hourly btrfs snapshots and cull them according to a certain algorithm. (See all the related posts here: 1, 2, 3, 4, 5, 6, 7, 8, 9) The furthest I ever got was weekly culling. Frankly, life and school contributed a good excuse not to keep going because I had created a huge headache for myself by attempting to figure out the date and cover all the possible corner cases with unit tests. This is what my code looked like in 2014.

Stratis or BTRFS?

It’s been a while since btrfs was first introduced to me via a Fedora version that had it as the default filesystem. At the time, it was especially brittle when it came to power outages. I ended up losing a system to one such outage. But a few years ago, I started using btrfs on my home directory, and I even developed a program to manage snapshots. My two favorite features of btrfs are that Copy on Write (COW) allows me to make snapshots that only take up space when the snapshotted file changes, and the ability to dynamically set up and grow RAID levels. I was able to use this recently to get my photo hard drive on RAID1 without having to have an extra hard drive (because most RAID solutions destroy what’s on the drive).

btrfs scrub complete

This was the status at the end of the scrub:

[root@supermario ~]# /usr/sbin/btrfs scrub start -Bd /media/Photos/

scrub device /dev/sdd1 (id 1) done

scrub started at Tue Mar 21 17:18:13 2017 and finished after 05:49:29

total bytes scrubbed: 2.31TiB with 0 errors

scrub device /dev/sda1 (id 2) done

scrub started at Tue Mar 21 17:18:13 2017 and finished after 05:20:56

total bytes scrubbed: 2.31TiB with 0 errors

I’m a bit perplexed at this information. Since this is a RAID1, I would expect it to be comparing info between disks - is this not so? If not, why? Because I would have expected both disks to end at the same time. Also, interesting to note that the 1TB/hr stopped being the case at some point.
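To put a number on that slowdown, here is a small Python sketch that turns the figures from the scrub output into an average rate per device. The function names are mine, chosen for illustration. It only does the arithmetic and says nothing about why the two devices finished at different times.

```python
def hms_to_hours(hms: str) -> float:
    """Convert an HH:MM:SS duration string to fractional hours."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours + minutes / 60 + seconds / 3600


def scrub_rate_tib_per_hour(tib_scrubbed: float, duration_hms: str) -> float:
    """Average scrub throughput in TiB per hour."""
    return tib_scrubbed / hms_to_hours(duration_hms)


# Figures copied from the scrub output above: (TiB scrubbed, duration).
devices = {
    "/dev/sdd1": (2.31, "05:49:29"),
    "/dev/sda1": (2.31, "05:20:56"),
}

for path, (tib, duration) in devices.items():
    rate = scrub_rate_tib_per_hour(tib, duration)
    print(f"{path}: {rate:.2f} TiB/hour")
```

Averaged over the whole run, that works out to roughly 0.40 and 0.43 TiB per hour, well under the 1.00TiB in 01:05:38 that the status command reported early on.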

Speed of btrfs scrub

Here’s the output of the status command:

[root@supermario ~]# btrfs scrub status /media/Photos/

scrub status for 27cc1330-c4e3-404f-98f6-f23becec76b5

scrub started at Tue Mar 21 17:18:13 2017, running for 01:05:38

total bytes scrubbed: 1.00TiB with 0 errors

So on Fedora 25 with an AMD-8323 (8 core, no hyperthreading) and 24GB of RAM with this hard drive and its 3TB brother in RAID1, it takes about an hour per terabyte to do a scrub. (Which seems about equal to what a coworker told me his system takes to do a zfs scrub - 40ish hours for about 40ish TB.)

Finally have btrfs setup in RAID1

A little under 3 years ago, I started exploring btrfs for its ability to help me limit data loss. Since then I’ve implemented a snapshot script to take advantage of the Copy-on-Write features of btrfs. But I hadn’t yet had the funds and the PC case space to do RAID1. I finally was able to implement it for my photography hard drive. This means that, together with regular scrubs, I should have a near minuscule chance of bit rot ruining any photos it hasn’t already corrupted.

Exploring Rockstor

I’ve been looking at NAS implementations for a long time. I looked at FreeNAS for a while then OpenMediaVault. But what I really wanted was to be able to take advantage of btrfs and its great RAID abilities - especially its ability to dynamically expand. So I was happy when I discovered Rockstor on Reddit. Here are some videos in which I explore the interface and how to work with Rockstor using a VM before setting it up on bare metal.

Post Script to yesterday's btrfs post

Looks like I was right about the non-commit and possibly also about the df -h.

Last night at the time I wrote the post:

# btrfs fi show /home

Label: 'Home1' uuid: 89cfd56a-06c7-4805-9526-7be4d24a2872

Total devices 1 FS bytes used 1.91TiB

devid 1 size 2.73TiB used 1.99TiB path /dev/sdb1

$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs 3.9G 600K 3.9G 1% /dev/shm

tmpfs 3.9G 976K 3.9G 1% /run

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/sda3 146G 52G 87G 38% /

tmpfs 3.9G 296K 3.9G 1% /tmp

/dev/sda1 240M 126M 114M 53% /boot

/dev/sdb1 2.8T 2.0T 769G 73% /home

babyluigi.mushroomkingdom:/media/xbmc 1.8T 1.6T 123G 93% /media/nfs/xbmc-mount

babyluigi.mushroomkingdom:/fileshares 15G 6.5G 7.5G 47% /media/nfs/babyluigi

tmpfs 795M 28K 795M 1% /run/user/500

And today:

A Quick Update on my use of btrfs and snapshots

Because of grad school, my work on Snap in Time has been quite halting - my last commit was 8 months ago. So I haven’t finished the quarterly and yearly culling part of my script. Since I’ve been making semi-hourly snapshots since March 2014, I had accumulated something like 1052 snapshots. While performance did improve a bit after I turned on the autodefrag option, it’s still a bit suboptimal, especially when dealing with database-heavy programs like Firefox, Chrome, and Amarok. At least that is my experience - it’s entirely possible that this is correlation and not causation, but I have read online that when btrfs needs to figure out snapshots and what to keep, delete, etc it can be a performance drag to have lots of snapshots. I’m not sure, but I feel like 1052 is a lot of snapshots. It’s certainly way more than I would have if my program were complete and working correctly.

btrfs needs autodefrag set

When I first installed my new hard drive with btrfs I was happy with how fast things were running because the hard drive was a SATA3 and the old one was SATA2. But recently two things were bugging the heck out of me - using either Chrome or Firefox was painfully slow. It wasn’t worth browsing the web on my Linux computer. Also, Amarok was running horribly - taking forever to go from song to song.

Exploring btrfs for backups Part 6: Backup Drives and changing RAID levels VM

Hard drives are relatively cheap, especially nowadays. But I still want to stay within my budget as I set up my backups and system redundancies. So, ideally, for my backup RAID I’d take advantage of btrfs’ ability to change RAID types on the fly and start off with one drive. Then I’d add another and go to RAID1. Then another and RAID5. Finally, the fourth drive and RAID6. At that point I’d have to be under some sort of Job-like God/Devil curse if all my drives failed at once, negating the point of the RAID. The best thinking right now is that you want to have backups, but want to try not to have to use them because of both offline time and the fact that a restore is never as clean as you hope it’ll be.

Exploring btrfs for backups Part 5: RAID1 on the Main Disks in the VM

So, back when I started this project, I laid out that one of the reasons I wanted to use btrfs on my home directory (don’t think it’s ready for / just yet) is that with RAID1, btrfs is self-healing. Obviously, magic can’t be done, but a checksum is stored as part of the data’s metadata and if the file doesn’t match the checksum on one disk, but does on the other, the file can be fixed. This can help protect against bitrot, which is the biggest thing that’s going to keep our children’s digital photos from lasting as long as the ones printed on archival paper. So, like I did the first time, I’ll first be trying it out in a Fedora VM that mostly matches my version, kernel, and btrfs-progs version. So, I went and added another virtual hard drive of the same size to my VM.
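The self-healing idea can be shown with a toy model in Python. This is only a sketch of the principle, not how btrfs implements it: the two "disks" are in-memory byte arrays, `zlib.crc32` stands in for the stored checksum, and `read_with_repair` is a name made up for this example.

```python
import zlib


def checksum(data: bytes) -> int:
    """CRC32 stands in here for whatever checksum the filesystem stores."""
    return zlib.crc32(data)


def read_with_repair(copies: list[bytearray], stored_checksum: int) -> bytes:
    """Return a copy that matches the checksum and overwrite any copy that does not."""
    good = next(
        (bytes(c) for c in copies if checksum(bytes(c)) == stored_checksum),
        None,
    )
    if good is None:
        # No magic: if every mirror is bad there is nothing to heal from.
        raise IOError("every copy fails its checksum; nothing to repair from")
    for copy in copies:
        if checksum(bytes(copy)) != stored_checksum:
            copy[:] = good  # heal the bad mirror from the good one
    return good


original = b"family photo bytes"
stored = checksum(original)   # recorded when the data was written
disk_a = bytearray(original)
disk_b = bytearray(original)
disk_b[3] ^= 0x01             # simulate one flipped bit on the second disk

data = read_with_repair([disk_a, disk_b], stored)
print(data == original, bytes(disk_b) == original)
```

With only one copy, the same check could still detect the flipped bit, but there would be no good mirror to repair from, which is why the second disk matters.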

Exploring btrfs for backups Part 4: Weekly Culls and Unit Testing

Back in August I finally had some time to do some things I’d been wanting to do with my Snap-in-Time btrfs program for a while now. First of all, I finally added the weekly code. So now my snapshots are cleaned up every three days and then every other week. Next on the docket is quarterly cleanups followed by yearly cleanups. Second, the big thing I’d wanted to do for a while now: come up with unit tests! Much more robust than my debug code and testing scripts, they helped me find corner cases. If you look at my git logs you can see that they helped me little-by-little figure out just what I needed to do as well as when my “fixes” broke other things. Yay! My first personal project with regression testing!
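For readers who want a feel for what a cull involves, here is a minimal Python sketch. It is not the Snap-in-Time code. It assumes snapshot names in the `YYYY-MM-DD-HHMM` form that appears in the Part 3 listing, and it uses a made-up rule: once a snapshot is more than three days old, keep only the newest one from each calendar day. The function name and the rule are both illustrative assumptions.

```python
from datetime import datetime, timedelta

NAME_FORMAT = "%Y-%m-%d-%H%M"  # e.g. 2014-03-13-2146


def snapshots_to_cull(names, now, keep_all_for=timedelta(days=3)):
    """Return the snapshot names to delete.

    Hypothetical rule: snapshots newer than the cutoff are all kept;
    for each older calendar day only the newest snapshot survives.
    """
    cutoff = now - keep_all_for
    newest_per_day = {}
    old = []
    for name in names:
        taken = datetime.strptime(name, NAME_FORMAT)
        if taken >= cutoff:
            continue  # still inside the keep-everything window
        old.append((taken, name))
        day = taken.date()
        if day not in newest_per_day or taken > newest_per_day[day][0]:
            newest_per_day[day] = (taken, name)
    keep = {name for _, name in newest_per_day.values()}
    return sorted(name for _, name in old if name not in keep)


names = [
    "2014-03-13-2146",
    "2014-03-13-2210",
    "2014-03-13-2300",
    "2014-03-14-0000",
    "2014-03-14-0100",
]
print(snapshots_to_cull(names, now=datetime(2014, 3, 17, 12, 0)))
```

Passing `now` in as a parameter, rather than calling `datetime.now()` inside the function, is what makes date corner cases easy to pin down in unit tests.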

Exploring btrfs for backups Part 3: The Script in Practice

Night of the second day:

# btrfs sub list /home

ID 275 gen 3201 top level 5 path home

ID 1021 gen 3193 top level 275 path .snapshots

ID 1023 gen 1653 top level 275 path .snapshots/2014-03-13-2146

ID 1024 gen 1697 top level 275 path .snapshots/2014-03-13-2210

ID 1025 gen 1775 top level 275 path .snapshots/2014-03-13-2300

ID 1027 gen 1876 top level 275 path .snapshots/2014-03-14-0000

ID 1028 gen 1961 top level 275 path .snapshots/2014-03-14-0100

ID 1029 gen 2032 top level 275 path .snapshots/2014-03-14-0200

ID 1030 gen 2105 top level 275 path .snapshots/2014-03-14-0300

ID 1031 gen 2211 top level 275 path .snapshots/2014-03-14-0400

ID 1032 gen 2284 top level 275 path .snapshots/2014-03-14-0500

ID 1033 gen 2357 top level 275 path .snapshots/2014-03-14-0600

ID 1035 gen 2430 top level 275 path .snapshots/2014-03-14-0700

ID 1036 gen 2506 top level 275 path .snapshots/2014-03-14-0800

ID 1037 gen 2587 top level 275 path .snapshots/2014-03-14-0900

ID 1038 gen 2667 top level 275 path .snapshots/2014-03-14-1700

ID 1039 gen 2774 top level 275 path .snapshots/2014-03-14-1800

ID 1040 gen 2879 top level 275 path .snapshots/2014-03-14-1900

ID 1041 gen 2982 top level 275 path .snapshots/2014-03-14-2000

ID 1042 gen 3088 top level 275 path .snapshots/2014-03-14-2100

ID 1043 gen 3193 top level 275 path .snapshots/2014-03-14-2200

Morning of the third day:

Exploring btrfs for backups Part 2: Installing on My /Home Directory and using my new Python Script

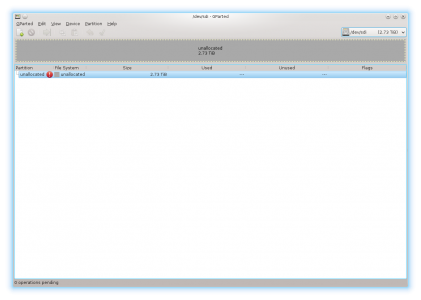

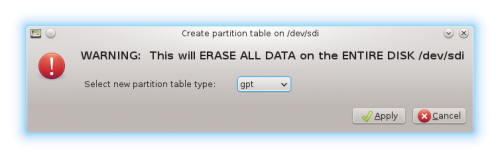

I got my new hard drive that would replace my old, aging /home hard drive. As you read in part 1, I wanted to put btrfs on it. This is my journey to get it up and running. Plugged it into my hard drive toaster and ran gparted.

Gparted for new drive

Gparted for new drive1

Exploring btrfs for backups Part 1

Recently I once again came across an article about the benefits of the btrfs Linux file system. Last time I’d come across it, it was still in alpha or beta, and I also didn’t understand why I would want to use it. However, the more I’ve learned about the fragility of our modern storage systems, the more I’ve thought about how I want to protect my data. My first step was to sign up for offsite backups. I’ve done this on my Windows computer via Backblaze. They are pretty awesome because it’s a constant backup so it meets all the requirements of not forgetting to do it. The computer doesn’t even need to be on at a certain time or anything. I’ve loved using them for the past 2+ years, but one thing that makes me consider their competition is that they don’t support Linux. That’s OK for now because all my photos are on my Windows computer, but it leaves me in a sub-optimal place. I know this isn’t an incredibly influential blog and I’m just one person, but I’d like to think writing about this would help them realize that they could a) lose a customer and b) be making more money from those with Linux computers.