Exploring btrfs for backups Part 5: RAID1 on the Main Disks in the VM

By EricMesa

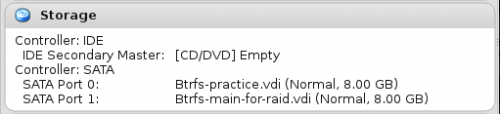

So, back when I started this project, I laid out that one of the reasons I wanted to use btrfs on my home directory (I don’t think it’s ready for / just yet) is that with RAID1, btrfs is self-healing. Obviously, it can’t work magic. However, btrfs stores a checksum for the data as part of its metadata. If a block doesn’t match its checksum on one disk but does on the other, the bad copy can be repaired from the good one. This helps protect against bitrot, which is the biggest thing that’s going to keep our children’s digital photos from lasting as long as the ones printed on archival paper. So, like I did the first time, I’ll first try it out in a Fedora VM that mostly matches my real system’s Fedora version, kernel, and btrfs-progs version. I added another virtual hard drive of the same size to the VM.
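To make the self-healing idea concrete, here’s a toy model in plain shell. It is not how btrfs works internally. Two files stand in for the two mirrored copies. POSIX `cksum` stands in for the checksum btrfs keeps in its metadata (btrfs itself uses crc32c). A copy that fails verification gets overwritten from one that passes. The helper names `crc_of` and `heal_mirrors` are made up for illustration.

```shell
#!/usr/bin/env bash
# Toy model of RAID1 self-healing. NOT btrfs code: cksum stands in for the
# per-block checksum btrfs stores in its metadata, and two files stand in
# for the two mirrored copies.
set -euo pipefail

# Print only the CRC field that cksum reports for a file.
crc_of() {
    cksum < "$1" | cut -d' ' -f1
}

# heal_mirrors STORED_CRC COPY_A COPY_B  (hypothetical helper)
# If exactly one copy still matches the stored checksum, copy it over the bad one.
heal_mirrors() {
    local stored=$1 a=$2 b=$3 crc_a crc_b
    crc_a=$(crc_of "$a")
    crc_b=$(crc_of "$b")
    if [[ $crc_a == "$stored" && $crc_b == "$stored" ]]; then
        echo "both copies OK"
    elif [[ $crc_a == "$stored" ]]; then
        cp "$a" "$b"; echo "repaired $b from $a"
    elif [[ $crc_b == "$stored" ]]; then
        cp "$b" "$a"; echo "repaired $a from $b"
    else
        echo "both copies bad: unrecoverable" >&2
        return 1
    fi
}

# Demo: write the same "photo" to both mirrors and remember its checksum.
dir=$(mktemp -d)
printf 'family photo bytes\n' > "$dir/disk1"
cp "$dir/disk1" "$dir/disk2"
stored=$(crc_of "$dir/disk1")
# Simulate bitrot by overwriting the first 4 bytes of one mirror.
printf 'XXXX' | dd of="$dir/disk2" bs=1 conv=notrunc 2>/dev/null
heal_mirrors "$stored" "$dir/disk1" "$dir/disk2"
cmp -s "$dir/disk1" "$dir/disk2" && echo "mirrors identical again"
rm -r "$dir"
```

The key point the model captures is that a checksum alone only *detects* corruption. It takes a second copy (RAID1) to *repair* it. If both copies are bad, nothing can be done.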

Next comes a part that I won’t be doing on my real machine, because there / (root) isn’t on the btrfs disk I want to RAID1:

```
#>sudo sfdisk -d /dev/sda | sudo sfdisk /dev/sdb
[sudo] password for ermesa: [sudo] password for ermesa:
sfdisk: Checking that no-one is using this disk right now ...
sfdisk: OK

Disk /dev/sdb: 1044 cylinders, 255 heads, 63 sectors/track
sfdisk: /dev/sdb: unrecognized partition table type

Old situation:
sfdisk: No partitions found
Sorry, try again.

New situation:
Units: sectors of 512 bytes, counting from 0

   Device Boot    Start       End   #sectors  Id  System
/dev/sdb1   *      2048   1026047    1024000  83  Linux
/dev/sdb2       1026048   2703359    1677312  82  Linux swap / Solaris
/dev/sdb3       2703360  16777215   14073856  83  Linux
/dev/sdb4             0         -          0   0  Empty
sfdisk: Warning: partition 1 does not end at a cylinder boundary
sfdisk: Warning: partition 2 does not start at a cylinder boundary
sfdisk: Warning: partition 2 does not end at a cylinder boundary
sfdisk: Warning: partition 3 does not start at a cylinder boundary
sfdisk: Warning: partition 3 does not end at a cylinder boundary
Successfully wrote the new partition table

Re-reading the partition table ...

sfdisk: If you created or changed a DOS partition, /dev/foo7, say, then use dd(1)
to zero the first 512 bytes:  dd if=/dev/zero of=/dev/foo7 bs=512 count=1
(See fdisk(8).)
```
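Before going further, you can confirm that the copied partition table really matches the original. Here’s a minimal sketch. It assumes `/dev/sdX`-style device names and that you first save each disk’s `sfdisk -d` dump to a file. The helper names `normalize_dump` and `same_layout` are hypothetical. The script masks the disk letter so that only the layout is compared.

```shell
#!/usr/bin/env bash
# Compare two `sfdisk -d` dumps while ignoring which /dev/sdX disk they came from.
set -euo pipefail

# Rewrite /dev/sda, /dev/sdb1, ... as /dev/sdX, /dev/sdX1, ...
# (assumes sdX-style names; adjust the pattern for vdX or nvme devices).
normalize_dump() {
    sed 's#/dev/sd[a-z]#/dev/sdX#g' "$1"
}

# same_layout DUMP_A DUMP_B (hypothetical helper): succeeds if the layouts match.
same_layout() {
    diff <(normalize_dump "$1") <(normalize_dump "$2") > /dev/null
}

# Usage (needs root to read the disks):
#   sudo sfdisk -d /dev/sda > sda.dump
#   sudo sfdisk -d /dev/sdb > sdb.dump
#   same_layout sda.dump sdb.dump && echo "layouts match"
```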

OK, so now I have to install GRUB on the second disk. Again, I wouldn’t do this on SuperMario, my real machine, but since the VM has btrfs on the whole system, I’m going to do it here.

```
#>sudo grub2-install /dev/sdb
Installation finished. No error reported.
```

Excellent. Now the btrfs-specific parts.

```
#> sudo btrfs device add /dev/sdb1 /
```

Before the (hopefully) last step, let’s see what this gives us in the current btrfs filesystem:

```
#>sudo btrfs fi show
Label: 'fedora'  uuid: e5d5f485-4ca8-4846-b8ad-c00ca8eacdd9
    Total devices 2 FS bytes used 2.82GiB
    devid    1 size 6.71GiB used 4.07GiB path /dev/sda3
    devid    2 size 500.00MiB used 0.00 path /dev/sdb1
```
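A mismatch like the 500 MiB partition above is easy to spot by eye, but you can also check for it mechanically before balancing. The sketch below reads `btrfs fi show` output on stdin and complains if the devices aren’t all the same size. The helper name `check_sizes` is hypothetical. It compares sizes as printed (already rounded), so two sizes that are close but not identical could still look equal.

```shell
#!/usr/bin/env bash
# Check that every device listed by `btrfs fi show` reports the same size.
set -euo pipefail

# check_sizes (hypothetical helper): reads `btrfs fi show` text on stdin,
# prints "<path>: <size>" for each devid line, and exits 1 on a mismatch.
check_sizes() {
    awk '
        $1 == "devid" {
            # fields: devid N size SIZE used USED path PATH
            print $8 ": " $4
            sizes[$4] = 1
            n++
        }
        END {
            if (n == 0) { print "no devid lines found"; exit 1 }
            distinct = 0
            for (s in sizes) distinct++
            if (distinct > 1) { print "size mismatch"; exit 1 }
            print "all devices the same size"
        }'
}

# Usage: sudo btrfs fi show | check_sizes
```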

Oh, this allowed me to catch a mistake: I should have added /dev/sdb3, not /dev/sdb1. Let me see if removing it fixes things.

```
#>sudo btrfs device delete /dev/sdb1 /
#> sudo btrfs fi show
Label: 'fedora'  uuid: e5d5f485-4ca8-4846-b8ad-c00ca8eacdd9
    Total devices 1 FS bytes used 2.82GiB
    devid    1 size 6.71GiB used 4.07GiB path /dev/sda3
```

OK, good. That appears to have put us back where we started. Let me try the correct parameters this time.

```
#>sudo btrfs device add /dev/sdb3 /
#> sudo btrfs fi show
Label: 'fedora'  uuid: e5d5f485-4ca8-4846-b8ad-c00ca8eacdd9
    Total devices 2 FS bytes used 2.82GiB
    devid    1 size 6.71GiB used 4.07GiB path /dev/sda3
    devid    2 size 6.71GiB used 0.00 path /dev/sdb3
```

Much better. See that both devices are the same size? Good. A df shows me that /boot is on sda1, so I’m not 100% convinced we’ll end up with a system that can boot no matter which hard drive fails. I’m not going to worry about that, since on SuperMario I’ll only be doing a home drive. However, you may want to check the documentation if you’re doing this for your boot drive as well. Time for the final command to turn it into RAID1:

```
#>sudo btrfs balance start -dconvert=raid1 -mconvert=raid1 /
```

That hammers the system for a while. I got the following error:

```
ERROR: error during balancing '/' - Read-only file system
```

I wonder what happened. And we can see that it really didn’t finish balancing:

```
#>sudo btrfs fi show
Label: 'fedora'  uuid: e5d5f485-4ca8-4846-b8ad-c00ca8eacdd9
    Total devices 2 FS bytes used 2.88GiB
    devid    1 size 6.71GiB used 5.39GiB path /dev/sda3
    devid    2 size 6.71GiB used 3.34GiB path /dev/sdb3
```

Hmm. Checking dmesg shows that it’s a systemd issue. I’ll reboot the VM in case the filesystem ended up in a weird state; it has definitely been acting a bit strange. The fact that it doesn’t want to reboot isn’t encouraging, so, since it’s just a VM, I decided to go for a hard reset. When I ran the balance command again, it said "Operation now in progress". I guess it saw that it hadn’t been able to complete the balance last time? I’m not sure, but if so, that’d be awesome. And maybe that’s why the reboot wouldn’t happen? But it gave me errors, so that’s a bit unintuitive if that’s what was going on.
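For what it’s worth, "Operation now in progress" is most likely the standard text of the EINPROGRESS error. btrfs returns that error when you try to start a balance while one is already running. The kernel also resumes an interrupted balance automatically when the filesystem is mounted again (unless it’s mounted with `skip_balance`), which would fit the guess above. `btrfs balance status /` reports whether a balance is running or paused, and `btrfs balance resume /` restarts a paused one. The sketch below wraps the status check. The phrases it matches ("No balance found", "is paused", "is running") are assumptions about btrfs-progs’ wording, so check them against your version. The helper name `balance_state` is hypothetical.

```shell
#!/usr/bin/env bash
# Classify the text printed by `btrfs balance status <path>`.
set -euo pipefail

# balance_state (hypothetical helper): reads the status text on stdin and
# prints "none", "paused", "running", or "unknown".
# The matched phrases are assumptions about btrfs-progs output.
balance_state() {
    local text
    text=$(cat)
    case $text in
        *"No balance found"*) echo none ;;
        *" is paused"*)       echo paused ;;
        *" is running"*)      echo running ;;
        *)                    echo unknown ;;
    esac
}

# Usage (needs root and a mounted btrfs filesystem). The exit status of
# `btrfs balance status` can depend on whether a balance is running, so it
# is deliberately ignored here:
#   state=$({ sudo btrfs balance status / || true; } | balance_state)
#   if [ "$state" = paused ]; then sudo btrfs balance resume /; fi
```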

Here’s what dmesg showed:

```
[  224.975078] BTRFS info (device sda3): found 12404 extents
[  243.538313] BTRFS info (device sda3): found 12404 extents
[  244.061442] BTRFS info (device sda3): relocating block group 389611520 flags 1
[  354.881373] BTRFS info (device sda3): found 14154 extents
[  387.088152] BTRFS info (device sda3): found 14154 extents
[  387.450010] BTRFS info (device sda3): relocating block group 29360128 flags 36
[  404.492103] hrtimer: interrupt took 3106176 ns
[  417.499860] BTRFS info (device sda3): found 8428 extents
[  417.788591] BTRFS info (device sda3): relocating block group 20971520 flags 34
[  418.079598] BTRFS info (device sda3): found 1 extents
[  418.832913] BTRFS info (device sda3): relocating block group 12582912 flags 1
[  421.570949] BTRFS info (device sda3): found 271 extents
[  425.489926] BTRFS info (device sda3): found 271 extents
[  426.188314] BTRFS info (device sda3): relocating block group 4194304 flags 4
[  426.720475] BTRFS info (device sda3): relocating block group 0 flags 2
```
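The `flags` number in those "relocating block group" lines is a bitmask. The low bits say what the block group holds (1 = data, 2 = system, 4 = metadata). The higher bits give its redundancy profile (8 = RAID0, 16 = RAID1, 32 = DUP, 64 = RAID10, 128 = RAID5, 256 = RAID6), following the kernel’s `BTRFS_BLOCK_GROUP_*` constants. No profile bit means "single". Decoded this way, the log above suggests the balance was relocating single data chunks (flags 1) and DUP metadata and system chunks (36 and 34), which is what you’d expect on a filesystem that started on one disk. The helper names `decode_bg_flags` and `annotate_relocations` are hypothetical.

```shell
#!/usr/bin/env bash
# Decode the "flags" value in dmesg lines such as
#   BTRFS info (device sda3): relocating block group 29360128 flags 36
# Bit values follow the kernel's BTRFS_BLOCK_GROUP_* constants.
set -euo pipefail

# decode_bg_flags FLAGS (hypothetical helper): prints "<type> <profile>".
decode_bg_flags() {
    local f=$1 kind="" profile=single
    # Low bits: what the block group holds (DATA+METADATA means mixed).
    (( f & 1 )) && kind+="DATA+"
    (( f & 2 )) && kind+="SYSTEM+"
    (( f & 4 )) && kind+="METADATA+"
    kind=${kind%+}
    # Higher bits: the redundancy profile; no profile bit means "single".
    if   (( f & 8 ));   then profile=RAID0
    elif (( f & 16 ));  then profile=RAID1
    elif (( f & 32 ));  then profile=DUP
    elif (( f & 64 ));  then profile=RAID10
    elif (( f & 128 )); then profile=RAID5
    elif (( f & 256 )); then profile=RAID6
    fi
    echo "${kind:-unknown} $profile"
}

# annotate_relocations (hypothetical helper): reads dmesg text on stdin and
# decodes every "relocating block group ... flags N" line it finds.
annotate_relocations() {
    local line re='relocating block group ([0-9]+) flags ([0-9]+)'
    while IFS= read -r line; do
        if [[ $line =~ $re ]]; then
            echo "block group ${BASH_REMATCH[1]}: $(decode_bg_flags "${BASH_REMATCH[2]}")"
        fi
    done
}

# Usage: sudo dmesg | annotate_relocations
```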

So it does look like it’s working on that. When it was done, I had:

```
#>sudo btrfs fi show
Label: 'fedora'  uuid: e5d5f485-4ca8-4846-b8ad-c00ca8eacdd9
    Total devices 2 FS bytes used 2.83GiB
    devid    1 size 6.71GiB used 3.62GiB path /dev/sda3
    devid    2 size 6.71GiB used 3.62GiB path /dev/sdb3
```

It’s encouraging that the same amount of space is used on each drive. But how do I confirm it’s RAID1? The answer is btrfs’ own version of df. btrfs needs its own version because, as of right now, much of what makes it an awesome copy-on-write (COW) filesystem also means that the usual GNU tools can’t accurately tell how much free space you have. So, let’s try it:

```
#>sudo btrfs fi df /
Data, RAID1: total=3.34GiB, used=2.70GiB
System, RAID1: total=32.00MiB, used=16.00KiB
Metadata, RAID1: total=256.00MiB, used=133.31MiB
unknown, single: total=48.00MiB, used=0.00
```

I’m slightly nervous about the "unknown" entry, but a quick Google search shows that it’s no big deal:

> 3.15 has this commit, it's the cause of the unknown. We'll roll the progs patch into the next progs release, but it's nothing at all to worry about.
>
> -chris
>
> ```
> Author: David Sterba <dsterba <at> suse.cz>
> Date:   Fri Feb 7 14:34:12 2014 +0100
>
>     btrfs: export global block reserve size as space_info
>
>     Introduce a block group type bit for a global reserve and fill the space info for SPACE_INFO ioctl. This should replace the newly added ioctl (01e219e8069516cdb98594d417b8bb8d906ed30d) to get just the 'size' part of the global reserve, while the actual usage can be now visible in the 'btrfs fi df' output during ENOSPC stress.
>
>     The unpatched userspace tools will show the blockgroup as 'unknown'.
>
>     CC: Jeff Mahoney <jeffm <at> suse.com>
>     CC: Josef Bacik <jbacik <at> fb.com>
>     Signed-off-by: David Sterba <dsterba <at> suse.cz>
>     Signed-off-by: Chris Mason <clm <at> fb.com>
> ```
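To make the "is it really RAID1?" check repeatable, you can scan the `btrfs fi df` output and require that every Data, System, and Metadata line reports the expected profile. The sketch below skips the global-reserve line, which older btrfs-progs show as "unknown" (as the quote explains) and newer releases label "GlobalReserve". It is reported as single in either case and isn’t part of the RAID1 data. The helper name `all_profiles` is hypothetical.

```shell
#!/usr/bin/env bash
# Verify that `btrfs filesystem df` reports the expected profile (RAID1 by
# default) for every Data, System, and Metadata line.
set -euo pipefail

# all_profiles [PROFILE] (hypothetical helper): reads `btrfs fi df` text on stdin.
# Lines whose type is not Data/System/Metadata (e.g. "unknown" or
# "GlobalReserve") are ignored.
all_profiles() {
    local want=${1:-RAID1}
    awk -F'[,:]' -v want="$want" '
        $1 ~ /^(Data|System|Metadata)$/ {
            prof = $2
            gsub(/ /, "", prof)
            if (prof != want) { print $1 " is " prof; bad = 1 }
            seen = 1
        }
        END {
            if (!seen) { print "no chunk lines found"; exit 1 }
            if (bad) exit 1
            print "all chunks " want
        }'
}

# Usage: sudo btrfs fi df / | all_profiles RAID1
```

Run against the output above, this should report `all chunks RAID1`. Before the conversion, it would have flagged the single data and DUP metadata chunks instead.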

So, there you go: it’s relatively simple to set up RAID1 on a btrfs system. It took just under an hour, but there was only about 3 GB to balance. Larger drives take longer, which is why RAID6 is better: it can survive another drive failing while you’re balancing in your replacement drive. The best thing is that it all runs on a live system, so you don’t have to give up using the computer while the balance runs. Again, if you’re doing this on your boot drive, use Google to confirm that /boot and all that are set up correctly, or you won’t quite have the redundancy protection you think you do. Next time is going to get a bit interesting as I simulate what I want to do with my backup btrfs hard drives. After that it’ll either be more Snap-In-Time code or my live migration to RAID1 on my home btrfs subvolume.